Mixed-Precision Training#

The CS system currently supports only mixed-precision training. Ensure that your models use:

16-bit input to arithmetic operations, and

FP32 accumulations.

Mixed precision, when combined with Dynamic Loss Scaling, can speed up training.
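To illustrate why FP32 accumulation matters, the following is a minimal sketch that emulates 16-bit inputs with either a 16-bit or a 32-bit running sum. It uses only the Python standard library; the helper names `to_fp16`, `to_fp32`, and `dot` are illustrative and are not part of any Cerebras API, and the sketch does not model how the CS system performs arithmetic internally.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE binary32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def dot(a, b, accumulate):
    """Dot product with 16-bit inputs; `accumulate` rounds the running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        # Inputs are rounded to FP16; their product is exact in a Python float.
        product = to_fp16(x) * to_fp16(y)
        # The accumulator is rounded to the chosen precision after every add.
        acc = accumulate(acc + product)
    return acc

ones = [1.0] * 4096
fp16_sum = dot(ones, ones, to_fp16)  # stalls once the FP16 spacing exceeds 1
fp32_sum = dot(ones, ones, to_fp32)  # keeps counting
print(fp16_sum, fp32_sum)  # 2048.0 4096.0
```

With a 16-bit accumulator the sum stops growing at 2048, because 2049 is not representable in FP16 and rounds back to 2048. The FP32 accumulator reaches the exact result.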

Data Formats#

The CS system supports the following data formats:

16-bit floating-point formats:

IEEE half-precision (binary16), also known as FP16.

CB16, a Cerebras 16-bit format with 6 exponent bits.

32-bit floating-point format:

IEEE single-precision (binary32), also known as FP32.

The 16-bit arithmetic uses 16-bit words and is always aligned to a 16-bit boundary.

The single-precision arithmetic uses even-aligned register pairs for register operands and 32-bit aligned addresses for memory operands.

Note

Memory is 16-bit word addressable. It is not byte addressable.
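One way to picture the alignment rules is the sketch below, which models memory as a list of 16-bit words. It assumes that a 32-bit aligned address corresponds to an even word address, and it stores the low-order word first; the word order and the helper names `fp32_to_words` and `store_fp32` are assumptions made for illustration, not documented CS system behavior.

```python
import struct

def fp32_to_words(x: float) -> list:
    """Split the binary32 bit pattern of x into two 16-bit words.

    Assumption: the low-order word comes first.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return [bits & 0xFFFF, bits >> 16]

def store_fp32(memory: list, word_addr: int, x: float) -> None:
    """Store x at a 16-bit word address, enforcing 32-bit alignment."""
    if word_addr % 2 != 0:
        # In word-addressable memory, 32-bit alignment means an even word address.
        raise ValueError("FP32 operands must start at an even word address")
    memory[word_addr:word_addr + 2] = fp32_to_words(x)

memory = [0] * 8           # eight 16-bit words
store_fp32(memory, 2, 1.0)  # occupies words 2 and 3
print([hex(w) for w in memory[2:4]])  # ['0x0', '0x3f80']
```

An FP16 or CB16 value occupies a single word, so any word address is valid for it.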

FP16#

The FP16 implementation follows the IEEE standard for binary16 (half-precision), with a 5-bit exponent and a 10-bit explicit mantissa.

Sign: 1 bit | Exponent: 5 bits | Mantissa: 10 bits
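The field layout can be inspected with Python's `struct` module, whose `e` format character packs IEEE binary16. The following is a small sketch; the function name `decode_fp16` is illustrative.

```python
import struct

def decode_fp16(x: float) -> tuple:
    """Return the (sign, exponent, mantissa) bit fields of x encoded as binary16."""
    bits = struct.unpack("<H", struct.pack("<e", x))[0]
    sign = bits >> 15                # 1 bit
    exponent = (bits >> 10) & 0x1F   # 5 bits, stored with a bias of 15
    mantissa = bits & 0x3FF          # 10 explicit bits
    return sign, exponent, mantissa

print(decode_fp16(1.0))   # (0, 15, 0)
print(decode_fp16(-2.5))  # (1, 16, 256)
```

For example, -2.5 is -1.25 × 2^1, so the stored exponent is 1 + 15 = 16 and the mantissa field holds 0.25 × 1024 = 256.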

CB16 Half-Precision#

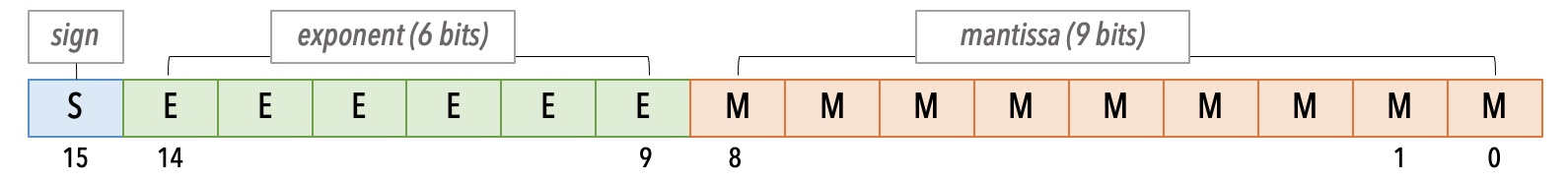

CB16 is Cerebras’ 16-bit format, also referred to as cbfloat16. It is a floating-point format with a 6-bit exponent and a 9-bit explicit mantissa. The extra exponent bit doubles the number of exponent values relative to FP16, which is the sense in which CB16 has double the dynamic range.

Cerebras CB16 Format#

With one more exponent bit than FP16, CB16 provides a larger range, with the following benefits:

Denormals are far less frequent.

Dynamic loss scaling is not necessary on many networks.

Note

The cbfloat16 data format is different from the bfloat16 Brain Floating Point format.
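To compare the three 16-bit layouts, the sketch below computes the largest finite value and the smallest normal value from the exponent and mantissa widths. It assumes IEEE-style conventions for every format (bias of 2^(e-1) - 1, with the top exponent code reserved for infinities and NaNs). These conventions are standard for FP16 and bfloat16, but this document does not state CB16's bias or reserved encodings, so the CB16 row is an estimate under that assumption.

```python
def format_range(exp_bits: int, man_bits: int) -> tuple:
    """Largest finite and smallest normal value, assuming IEEE-style encoding."""
    bias = 2 ** (exp_bits - 1) - 1
    max_exp = (2 ** exp_bits - 2) - bias  # top exponent code assumed reserved
    min_exp = 1 - bias                    # exponent code 0 assumed for denormals
    largest = (2 - 2.0 ** -man_bits) * 2.0 ** max_exp
    smallest_normal = 2.0 ** min_exp
    return largest, smallest_normal

formats = {
    "FP16": (5, 10),
    "CB16 (assumed IEEE-style)": (6, 9),
    "bfloat16": (8, 7),
}
for name, (e, m) in formats.items():
    largest, smallest = format_range(e, m)
    print(f"{name}: max {largest:.6g}, min normal {smallest:.6g}")
```

Under these assumptions FP16 tops out at 65504, while CB16 reaches about 4.29e9 with a smallest normal value near 9.31e-10, which is consistent with denormals being less frequent. The bfloat16 format trades two more mantissa bits for an 8-bit exponent, which is why the two formats are not interchangeable.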

Using CB16#

In your code, make sure the following two conditions are satisfied:

1. In the parameters YAML file, in the `csconfig` section, set the key `use_cbfloat16` to `True`.

    ```yaml
    csconfig:
      ...
      use_cbfloat16: True
    ```

2. In your code, while constructing the `CSRunConfig` object, read the key-value pair for `use_cbfloat16` from the `csconfig` list in the YAML file. See the following:

    ```python
    ...
    # get cs-specific configs
    cs_config = get_csconfig(params.get("csconfig", dict()))
    ...
    est_config = CSRunConfig(
        cs_ip=runconfig_params["cs_ip"],
        cs_config=cs_config,
        stack_params=stack_params,
        **csrunconfig_dict,
    )
    warm_start_settings = create_warm_start_settings(
        runconfig_params, exclude_string=output_layer_name
    )
    est = CerebrasEstimator(
        model_fn=model_fn,
        model_dir=runconfig_params["model_dir"],
        config=est_config,
        params=params,
        warm_start_from=warm_start_settings,
    )
    ```

Important

Ensure that both conditions (1) and (2) above are satisfied.
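A quick sanity check on the loaded parameters can catch a missing flag before the run config is built. The following is a minimal sketch that operates on an already-parsed parameters dictionary; `uses_cbfloat16` is a hypothetical helper, not a Cerebras function, and it only inspects the YAML side of the two conditions.

```python
def uses_cbfloat16(params: dict) -> bool:
    """Return True only if csconfig.use_cbfloat16 is explicitly set to True."""
    csconfig = params.get("csconfig") or {}
    return csconfig.get("use_cbfloat16", False) is True

# Example parameter dictionaries, as they might look after loading the YAML file.
enabled = {"csconfig": {"use_cbfloat16": True}}
missing = {"csconfig": {}}

print(uses_cbfloat16(enabled))  # True
print(uses_cbfloat16(missing))  # False
```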

FP32 Single-Precision#

FP32 is equivalent to IEEE binary32 (single-precision), with an 8-bit exponent and a 23-bit explicit mantissa.

Sign: 1 bit | Exponent: 8 bits | Mantissa: 23 bits
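The binary32 fields can be extracted and recombined with the standard library, as in the sketch below. The function names are illustrative, and `fp32_value` handles normal numbers only (it does not cover zeros, denormals, infinities, or NaNs).

```python
import struct

def decode_fp32(x: float) -> tuple:
    """Return the (sign, exponent, mantissa) bit fields of x encoded as binary32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF       # 23 explicit bits
    return sign, exponent, mantissa

def fp32_value(sign: int, exponent: int, mantissa: int) -> float:
    """Rebuild a normal binary32 value from its fields."""
    return (-1.0) ** sign * (1 + mantissa / 2 ** 23) * 2.0 ** (exponent - 127)

fields = decode_fp32(-2.5)
print(fields)               # (1, 128, 2097152)
print(fp32_value(*fields))  # -2.5
```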