Original Cerebras Installation

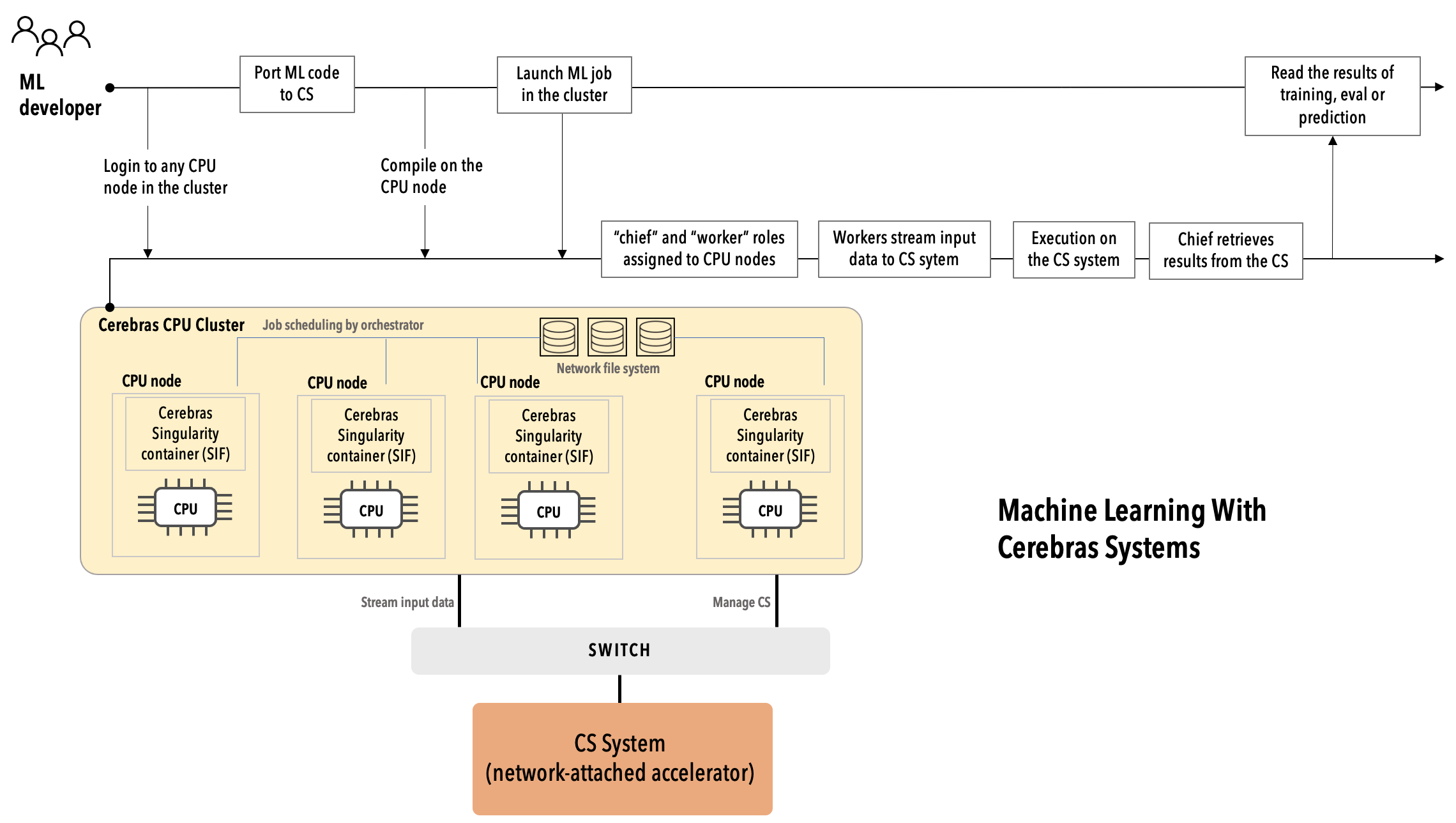

The Original Cerebras Installation is designed to train small and medium-sized neural networks. It consists of the following components (see Fig. 13):

CS-2 system. The CS-2 is powered by the Wafer-Scale Engine (WSE), which performs the core training and inference computations of the neural network. Each rack-mounted CS-2 contains one WSE, and the system powers, cools, and delivers data to it. For more information, see the WSE-2 datasheet, the virtual tour of the CS-2, and the CS-2 white paper.

CPU cluster. At runtime, each CPU node in the Original Cerebras Support Cluster is assigned one of two distinct roles: chief (a single node) or worker (one or more nodes).

Chief node. The chief node compiles the ML model into a Cerebras executable and manages initialization and the training loop on the CS system. Usually, one CPU node is assigned exclusively to the chief role.

Worker nodes. The worker nodes run the input pipeline and stream data to the CS system. One or more CPU nodes are assigned as workers, and you can scale the number of worker nodes up or down to provide the desired input bandwidth.
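The split into one chief and several workers, and the idea of sizing the worker pool to the input bandwidth you need, can be sketched in Python. This is a minimal illustration, not Cerebras code: the node names, the rule that the first node becomes the chief, the helper names `assign_roles` and `workers_needed`, and the assumption that input bandwidth scales linearly with the number of workers are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RoleAssignment:
    chief: str          # the single node that compiles the model and runs the training loop
    workers: list[str]  # nodes that run the input pipeline and stream data


def assign_roles(nodes: list[str]) -> RoleAssignment:
    """Assign the first node the chief role and all remaining nodes the worker role.

    Hypothetical rule: which node becomes chief is arbitrary in this sketch;
    the real scheduler may choose differently.
    """
    if len(nodes) < 2:
        raise ValueError("need one chief node and at least one worker node")
    return RoleAssignment(chief=nodes[0], workers=list(nodes[1:]))


def workers_needed(target_gbps: float, per_worker_gbps: float) -> int:
    """Smallest worker count whose combined bandwidth meets the target.

    Assumes (hypothetically) that each worker contributes the same,
    fixed streaming bandwidth and that contributions add up linearly.
    """
    if target_gbps <= 0 or per_worker_gbps <= 0:
        raise ValueError("bandwidths must be positive")
    return math.ceil(target_gbps / per_worker_gbps)


if __name__ == "__main__":
    nodes = ["cpu-node-0", "cpu-node-1", "cpu-node-2", "cpu-node-3"]
    roles = assign_roles(nodes)
    print(roles.chief)    # cpu-node-0
    print(roles.workers)  # ['cpu-node-1', 'cpu-node-2', 'cpu-node-3']
    # Illustrative numbers only: 40 Gb/s target, 12 Gb/s per worker.
    print(workers_needed(40.0, 12.0))  # 4
```

Rounding up with `math.ceil` reflects the intent of scaling workers "to provide the desired input bandwidth": a fractional shortfall still requires one more whole node.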

Fig. 13 User workflow in the Original Cerebras Installation

All resource scheduling and orchestration are handled by Slurm, a workload manager that runs on the CPU nodes.

Warning

Cerebras is in the process of deprecating the Original Cerebras Installation and will eventually support only the Cerebras Wafer-Scale Cluster. If you currently run the Original Cerebras Installation, contact your Cerebras representative.