Train, Eval and Predict

On This Page

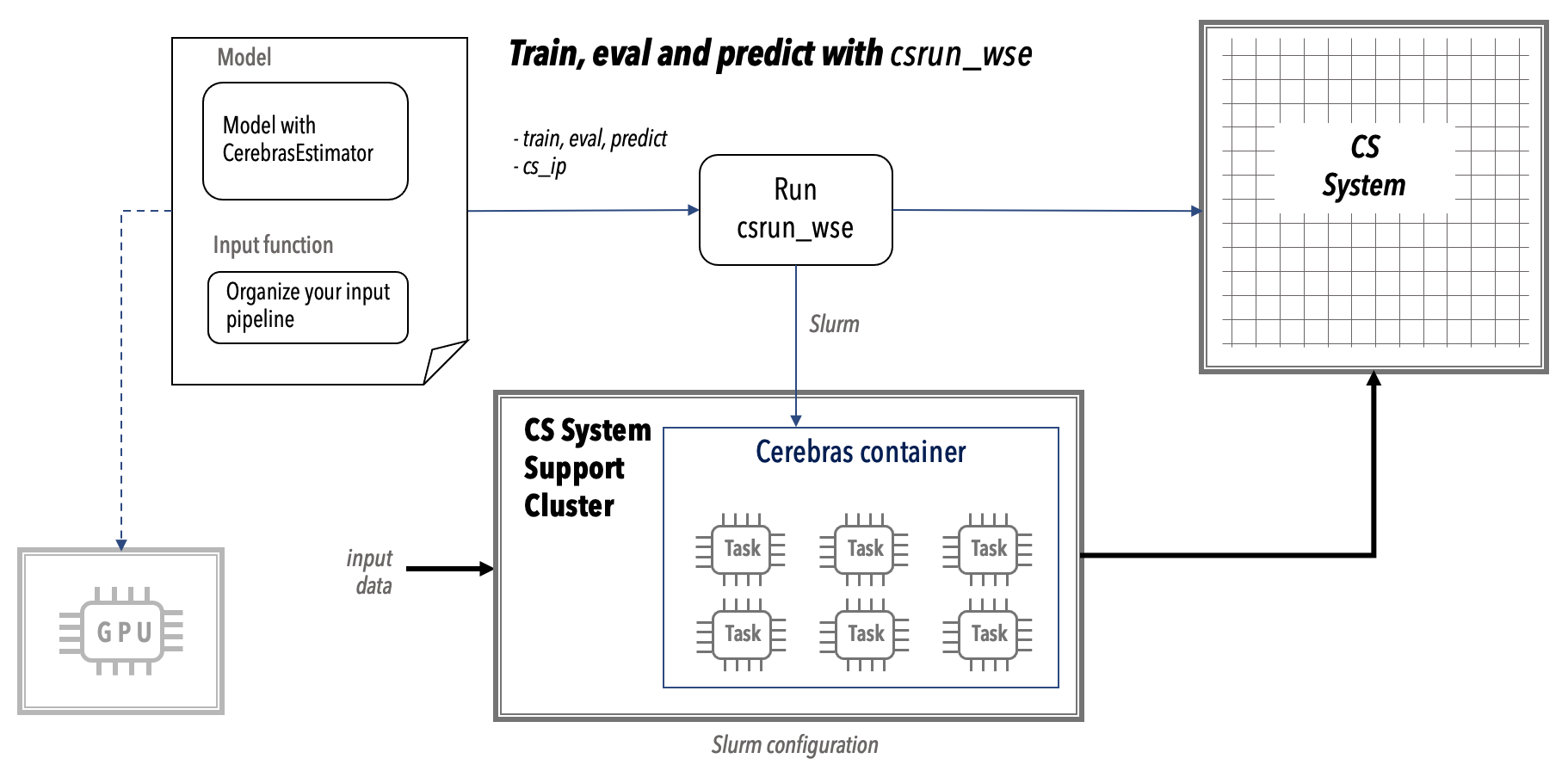

Train, Eval and Predict¶

This section describes how to:

Run training, eval or prediction on the Cerebras system.

Run training, eval or prediction on a CPU or a GPU without modifying your code.

On the CS system, you use the csrun_wse script. On a CPU within the Cerebras cluster, you use the csrun_cpu script. See the examples below.

See also

See The csrun_wse Script for detailed documentation on how to use this Bash script.

Examples

Train

The following command executes the user command python run.py --mode=train --cs_ip=0.0.0.0, which initiates model training on the CS system at the given cs_ip address.

csrun_wse python run.py --mode=train --cs_ip=0.0.0.0

The following command executes the user command python run.py --mode=train --cs_ip=0.0.0.0, which initiates model training on the CS system at the given cs_ip address. For this training run, the Slurm orchestrator uses 3 nodes, 5 tasks per worker and 16 CPUs per task.

csrun_wse --nodes=3 --tasks_per_worker=5 --cpus_per_task=16 python run.py --mode=train --cs_ip=0.0.0.0

The following command executes the user command python run.py --mode=train --params configs/your-params-file.yaml --model_dir your-model-dir --cs_ip=10.255.253.0, which initiates training on the CS system at the IP address 10.255.253.0. If the Slurm variables are not passed to the csrun_wse script, the values that the system administrator set in the csrun_cpu script are used. See Configuring csrun_cpu.

csrun_wse python run.py --mode=train \
--params configs/your-params-file.yaml \
--model_dir your-model-dir \
--cs_ip=10.255.253.0
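For longer invocations like this one, it can help to keep the paths and IP address in variables and build the command as a Bash array, so that each argument stays intact even if a path contains spaces. The sketch below is an illustration only and is not part of csrun_wse: the PARAMS, MODEL_DIR, CS_IP and DRY_RUN variables are assumptions, and the Slurm options are the ones from the three-node example above.

```shell
#!/usr/bin/env bash
# Sketch: assemble a csrun_wse training command from variables.
# PARAMS, MODEL_DIR, CS_IP and DRY_RUN are illustrative placeholders,
# not settings that csrun_wse reads by itself.
set -e

PARAMS="configs/your-params-file.yaml"
MODEL_DIR="your-model-dir"
CS_IP="10.255.253.0"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the command only, 0 = run it

# Optional Slurm overrides. Leave the array empty to fall back to the
# values the system administrator configured in csrun_cpu.
slurm_opts=(--nodes=3 --tasks_per_worker=5 --cpus_per_task=16)

# Each array element is passed to csrun_wse as one argument.
cmd=(csrun_wse "${slurm_opts[@]}" python run.py
     --mode=train
     --params "$PARAMS"
     --model_dir "$MODEL_DIR"
     --cs_ip="$CS_IP")

if [[ "$DRY_RUN" == "1" ]]; then
  echo "${cmd[*]}"    # show the full command for review
else
  "${cmd[@]}"         # launch the job
fi
```

Once the printed command looks right, run the script again with DRY_RUN=0 to launch the job.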

Eval

csrun_wse python run.py --mode=eval --cs_ip=0.0.0.0

This executes the user command python run.py --mode=eval --cs_ip=0.0.0.0, which initiates model evaluation on the CS system at the given cs_ip address.

Predict

csrun_wse python run.py --mode=predict --cs_ip=0.0.0.0

This executes the user command python run.py --mode=predict --cs_ip=0.0.0.0, which initiates prediction using the trained model on the CS system at the given cs_ip address.
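Because the train, eval and predict commands differ only in the --mode value, a short loop can run them back to back against the same CS system. This is a hypothetical convenience script, not part of the Cerebras tooling. It assumes that your run.py reads and writes checkpoints in a shared --model_dir across all three modes, and it uses a DRY_RUN variable (also an assumption) to print the commands instead of running them. With set -e, a failed training run stops the script before eval starts.

```shell
#!/usr/bin/env bash
# Sketch: run train, eval and predict in sequence with csrun_wse.
# CS_IP, PARAMS, MODEL_DIR and DRY_RUN are illustrative placeholders.
set -e

CS_IP="0.0.0.0"                          # replace with your CS system IP
PARAMS="configs/your-params-file.yaml"
MODEL_DIR="your-model-dir"               # assumed shared by all three modes
DRY_RUN="${DRY_RUN:-1}"                  # 1 = print only, 0 = execute

run_mode() {
  local mode="$1"
  local cmd=(csrun_wse python run.py --mode="$mode"
             --params "$PARAMS" --model_dir "$MODEL_DIR" --cs_ip="$CS_IP")
  if [[ "$DRY_RUN" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"    # with set -e, a failure here stops the remaining modes
  fi
}

for mode in train eval predict; do
  run_mode "$mode"
done
```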

Note

After you port your TensorFlow code to Cerebras, you can also run training, eval or prediction on either the CS system or on CPU- and GPU-based systems without recoding your model.

Train on CPU or GPU

On a CPU within the Cerebras cluster

To train on a CPU, do not use Slurm to invoke the standard Singularity container. Instead, launch the training command with csrun_cpu, as in the following example:

csrun_cpu python run.py

See also

See The csrun_cpu Script for detailed documentation on how to use this Bash script.
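Since the same run.py runs on either target, a small wrapper can pick the launcher based on whether a CS system IP is available: csrun_wse with --cs_ip when it is, csrun_cpu otherwise. The function name launch and the CS_IP and DRY_RUN variables are hypothetical, and the sketch assumes that your run.py runs on the CPU when --cs_ip is omitted; check how your own run.py handles this before relying on it.

```shell
#!/usr/bin/env bash
# Sketch: choose csrun_wse or csrun_cpu depending on whether CS_IP is set.
# launch, CS_IP and DRY_RUN are hypothetical names used for illustration.
set -e

DRY_RUN="${DRY_RUN:-1}"   # 1 = print only, 0 = execute

launch() {
  local cmd
  if [[ -n "${CS_IP:-}" ]]; then
    # A CS system IP is available: run on the CS system via csrun_wse.
    cmd=(csrun_wse python run.py "$@" --cs_ip="$CS_IP")
  else
    # No CS system IP: run the same script on a CPU via csrun_cpu.
    # Assumes run.py falls back to the CPU when --cs_ip is omitted.
    cmd=(csrun_cpu python run.py "$@")
  fi
  if [[ "$DRY_RUN" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example: a CPU run (CS_IP unset), then a run on the CS system.
unset CS_IP
launch --mode=train
CS_IP="10.255.253.0" launch --mode=train
```

The model code and its flags stay the same in both cases; only the launcher and the --cs_ip argument change.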

On a CPU or GPU outside the Cerebras cluster

To run on a CPU or GPU outside of the CS system environment, you do not use the Cerebras container. However, you must use the version of CerebrasEstimator provided in the Cerebras Reference Implementations repo. Follow these steps:

Clone the Cerebras Reference Implementations repo.

Import the versions of CerebrasEstimator and RunConfig from the repo. Use these versions both within the Cerebras container and outside of the Cerebras environment, so that you can easily switch between the CS system and a GPU.