CosineAnnealingWarmRestarts

class modelzoo.common.pytorch.optim.lr_scheduler.CosineAnnealingWarmRestarts (optimizer: torch.optim.optimizer.Optimizer, initial_learning_rate: float, T_0: int, T_mult: int, eta_min: float, disable_lr_steps_reset: bool = False)

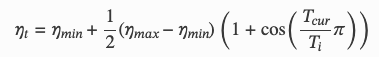

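Set the learning rate of each parameter group using a cosine annealing schedule, where $\eta_{max}$ is set to the initial learning rate, $T_{cur}$ is the number of steps since the last restart, and $T_i$ is the number of steps between two warm restarts in SGDR:

$$
\eta_t = \eta_{min} + \frac{1}{2}\left(\eta_{max} - \eta_{min}\right)\left(1 + \cos\left(\frac{T_{cur}}{T_i}\pi\right)\right)
$$

When $T_{cur} = T_i$, set $\eta_t = \eta_{min}$. When $T_{cur} = 0$ after a restart, set $\eta_t = \eta_{max}$.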
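To make the formula concrete, here is a minimal pure-Python sketch of the per-step learning rate for the case this class currently supports, T_mult = 1, where every cycle has the same length $T_i = T_0$. The function name `cosine_warm_restarts_lr` is hypothetical and not part of modelzoo; it only evaluates the equation above, assuming $\eta_{max}$ equals `initial_learning_rate`.

```python
import math


def cosine_warm_restarts_lr(step: int, initial_learning_rate: float,
                            T_0: int, eta_min: float) -> float:
    """Hypothetical helper: SGDR learning rate at a global step, assuming T_mult = 1."""
    if T_0 < 1:
        raise ValueError("T_0 must be a positive integer")
    # With T_mult = 1 every cycle has length T_i = T_0, so the number of
    # steps since the last restart is just the remainder of step / T_0.
    T_cur = step % T_0
    T_i = T_0
    eta_max = initial_learning_rate  # eta_max is the initial learning rate
    # eta_t = eta_min + 1/2 * (eta_max - eta_min) * (1 + cos(pi * T_cur / T_i))
    return eta_min + 0.5 * (eta_max - eta_min) * (1.0 + math.cos(math.pi * T_cur / T_i))


# Usage: the rate decays within each 10-step cycle and jumps back to 0.1 at step 10.
for step in range(0, 12, 2):
    print(step, round(cosine_warm_restarts_lr(step, 0.1, T_0=10, eta_min=0.0), 4))
```

Because $T_{cur}$ wraps to 0 exactly at the restart step, $\eta_{min}$ is approached but not reached at integer steps; the restart step itself returns $\eta_{max}$.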

This schedule was proposed in SGDR: Stochastic Gradient Descent with Warm Restarts.

- Parameters:

  - optimizer – The optimizer to schedule.
  - initial_learning_rate – The initial learning rate, used as $\eta_{max}$.
  - T_0 – Number of iterations until the first restart.
  - T_mult – The factor by which $T_i$ is multiplied after each restart. Currently, T_mult must be set to 1.
  - eta_min – Minimum learning rate, $\eta_{min}$.
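The class currently accepts only T_mult = 1, so every cycle lasts T_0 steps. For reference, in SGDR (and in PyTorch's `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts`) a larger T_mult multiplies the cycle length after each restart, giving $T_i = T_0 \cdot T_{mult}^{\,i}$ for the $i$-th cycle. The hypothetical helper `locate_in_cycle` below is a minimal sketch, not part of modelzoo, that maps a global step to the pair $(T_{cur}, T_i)$ under that rule; those two values are what the cosine formula consumes.

```python
def locate_in_cycle(step: int, T_0: int, T_mult: int) -> tuple[int, int]:
    """Hypothetical helper: return (T_cur, T_i) for a global step under SGDR cycle growth."""
    if T_0 < 1 or T_mult < 1:
        raise ValueError("T_0 and T_mult must be positive integers")
    if T_mult == 1:
        # Fixed-length cycles: the only setting this class currently supports.
        return step % T_0, T_0
    # Growing cycles: subtract whole cycles, multiplying the length by T_mult each time.
    T_i = T_0
    T_cur = step
    while T_cur >= T_i:
        T_cur -= T_i
        T_i *= T_mult
    return T_cur, T_i


# Usage: with T_0 = 10 and T_mult = 2, restarts happen at steps 10, 30, 70, ...
print(locate_in_cycle(29, T_0=10, T_mult=2))
print(locate_in_cycle(30, T_0=10, T_mult=2))
```

With T_mult = 1 the helper reduces to `step % T_0`, which matches the fixed-cycle behavior described above.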