Optimizing performance with automatic microbatching#

Overview#

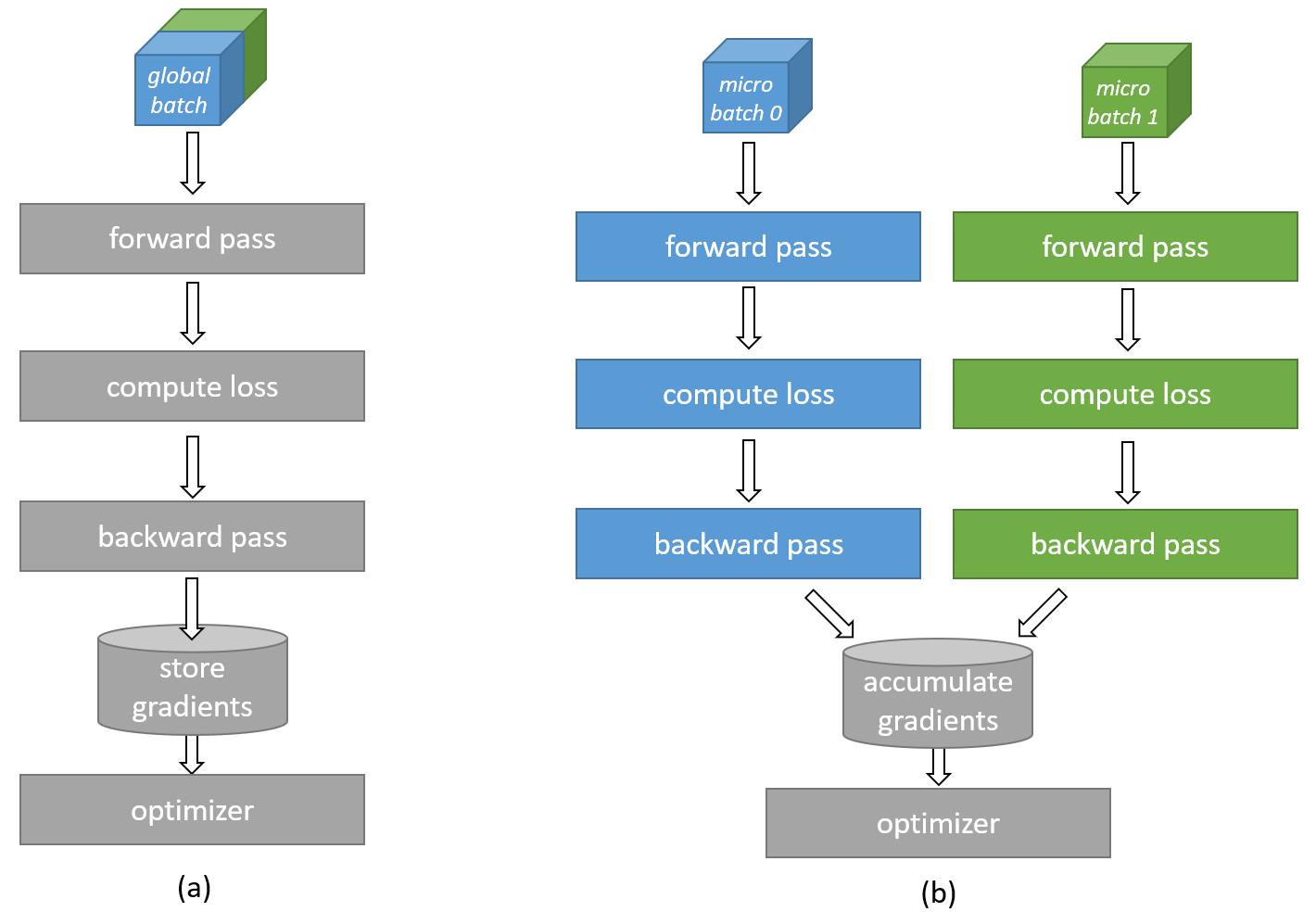

Microbatching is a technique that reduces per-system memory usage and improves run performance by dividing the global batch size into smaller sub-batches that can be run individually. The gradients from each microbatch are then accumulated before the weight update, so the model still operates on the total global batch size.

Here, we explain how to manually specify a microbatch size for your execution or leverage Cerebras’ automatic microbatching feature, which automatically identifies optimal microbatch values tailored to your model and configuration.

Key Terms and Parameters#

- num_csx

This YAML parameter specifies the number of Cerebras CS-X systems (e.g., CS-2s or CS-3s) that are being used for the model run.

- batch_size

This YAML parameter specifies the global batch size of the model before the model is split along the batch dimension across num_csx systems or into micro-batches.

- Per-system batch size

This is a useful term, defined implicitly as batch_size / num_csx, which represents the size of the batch that will be used on each Cerebras system.

- micro_batch_size

This YAML parameter controls the micro-batch size that will be used on each Cerebras system.

Warning

The batch_size parameter must be evenly divisible by num_csx, and the per-system batch size, batch_size / num_csx, must be evenly divisible by micro_batch_size.
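The two divisibility rules in the warning can be checked before launching a run. The helper below is a minimal sketch, not part of the Cerebras software: the function name `per_system_batch_size` and its use of `ValueError` are assumptions made for illustration.

```python
def per_system_batch_size(batch_size: int, num_csx: int, micro_batch_size: int) -> int:
    """Return batch_size / num_csx after checking both divisibility rules.

    Hypothetical helper for illustration; it is not a Cerebras API.
    """
    if batch_size <= 0 or num_csx <= 0 or micro_batch_size <= 0:
        raise ValueError("batch_size, num_csx and micro_batch_size must be positive")
    # Rule 1: the global batch must split evenly across the CS-X systems.
    if batch_size % num_csx != 0:
        raise ValueError(
            f"batch_size={batch_size} is not evenly divisible by num_csx={num_csx}"
        )
    per_system = batch_size // num_csx
    # Rule 2: each system's share must split evenly into micro-batches.
    if per_system % micro_batch_size != 0:
        raise ValueError(
            f"per-system batch size {per_system} is not evenly divisible by "
            f"micro_batch_size={micro_batch_size}"
        )
    return per_system


print(per_system_batch_size(512, 2, 64))  # 256
```

A configuration such as `batch_size=512, num_csx=3` fails the first rule, and `batch_size=512, num_csx=2, micro_batch_size=100` fails the second.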

Background#

Splitting model training or evaluation along the batch dimension into smaller micro-batches enables the model to run with batch sizes larger than can physically fit in available device memory. The Cerebras software stack supports automatic microbatching for transformer models via the Gradient Accumulation feature, without requiring changes to model code. The Cerebras software can also automatically find performant values of the micro-batch size.

As illustrated in Fig. 14, a batch size exceeding the device memory capacity can be divided into smaller micro-batches. Each micro-batch is computed separately, and the resulting gradients are accumulated across micro-batches before the final update to the network weights occurs. Statistics like loss can be combined across micro-batches in a similar way. This way, Gradient Accumulation emulates a bigger batch size by running multiple smaller micro-batches and combining the results.

Fig. 14 Tiling and accumulation of gradients along the batch dimension.

This behavior is controlled via the micro_batch_size parameter in the model’s YAML config file, as described below.
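To make the accumulation idea concrete, the following is a minimal sketch in plain Python rather than the Cerebras implementation. It assumes a toy one-parameter model with a mean squared error loss; all function names are hypothetical. It shows that summing per-sample gradients micro-batch by micro-batch and normalizing by the global batch size gives the same gradient as processing the whole batch at once.

```python
import math


def sample_grad(w, x, y):
    """Gradient of the squared error (w*x - y)**2 with respect to w."""
    return 2.0 * (w * x - y) * x


def full_batch_grad(w, xs, ys):
    """Mean gradient computed over the whole batch in one pass."""
    return sum(sample_grad(w, x, y) for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, micro_batch_size):
    """Mean gradient computed by accumulating over micro-batches."""
    total = 0.0
    for start in range(0, len(xs), micro_batch_size):
        mb_x = xs[start:start + micro_batch_size]
        mb_y = ys[start:start + micro_batch_size]
        # Accumulate the gradient sum of this micro-batch only.
        total += sum(sample_grad(w, x, y) for x, y in zip(mb_x, mb_y))
    # Normalize once by the global batch size before the weight update.
    return total / len(xs)


xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [1.0, 2.1, 2.9, 4.2, 5.1, 5.8, 7.2, 8.1]
w = 0.3

g_full = full_batch_grad(w, xs, ys)
g_acc = accumulated_grad(w, xs, ys, micro_batch_size=2)
print(math.isclose(g_full, g_acc, rel_tol=1e-9))  # True
```

The equality holds because the loss here is a plain average over samples. An operation that mixes samples within a batch, such as batch normalization, breaks this property.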

Procedure#

To configure microbatching settings in the Cerebras Model Zoo, set the micro_batch_size parameter in the train_input and/or eval_input section of the YAML config.

An example YAML configuration is shown below. A global batch size of 512 is split between two CS-X systems into a per-system batch size of 256, and each CS-X processes this in micro-batches of size 64 (that is, in four steps of Gradient Accumulation).

    train_input:
        batch_size: 512
        micro_batch_size: 64
        ...
    run_config:
        num_csx: 2
        ...
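The arithmetic behind this example can be sketched as follows. The dict mirrors the YAML keys shown above; the function `accumulation_steps` is a hypothetical name introduced only for this illustration.

```python
# Mirrors the YAML example as a plain dict, so no YAML parser is needed.
config = {
    "train_input": {"batch_size": 512, "micro_batch_size": 64},
    "run_config": {"num_csx": 2},
}


def accumulation_steps(cfg):
    """Return (per-system batch size, Gradient Accumulation steps per system)."""
    batch_size = cfg["train_input"]["batch_size"]
    micro_batch_size = cfg["train_input"]["micro_batch_size"]
    num_csx = cfg["run_config"]["num_csx"]
    per_system = batch_size // num_csx          # 512 / 2
    steps = per_system // micro_batch_size      # 256 / 64
    return per_system, steps


per_system, steps = accumulation_steps(config)
print(per_system, steps)  # 256 4
```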

You can set this micro-batch value manually, have the Cerebras compiler automatically determine a reasonable value via the auto setting, or find a near-optimal value via the explore setting. Both automatic options, while convenient, result in a longer compile time. For this reason, we recommend using auto or explore to find a good setting, then setting it manually for subsequent experiments.

The available settings for micro_batch_size are described in detail below:

- auto (default): Automatically choose a reasonable micro-batch size. With this setting, the compiler will drop the model to a smaller micro-batch size if the original batch size per CS-X system does not fit into device memory or the compiler estimates that a lower micro-batch size will achieve significantly better samples/second performance. This setting may incur a high compile time due to the search for a satisfactory micro-batch size. Compared to the “explore” setting, “auto” incurs less compile time penalty but can pick sub-optimal micro-batch values. This is the default value if micro_batch_size is not specified.

- explore: This setting performs an exhaustive search for a near-optimal micro-batch size. Because this mode can take several hours to run, it can only be specified in compile_only mode (this mode can be set as an argument to run.py or a yaml parameter). Note that this is different from the “auto” setting of micro_batch_size, which tries to find a reasonable micro-batch size selection without too large an increase of compile time. You can generally expect higher-quality selection of micro-batch size values with "explore" at the expense of a longer compilation run. See Using “explore” to Search for a Near-Optimal Microbatch Size for more information.

- <positive_int>: You can manually set the explicit micro-batch size you would like the compiler to use. We recommend setting this when you have a good idea of what a performant micro-batch size is, as it will dramatically reduce compile time. Note that setting the micro-batch size yourself has some restrictions: you must make sure that batch_size/num_csx is a multiple of micro_batch_size. In other words, the global batch size divided by the number of CS-X systems you are using for a particular job must be a multiple of your specified micro-batch size. A RuntimeError will be raised if this condition is not met.

- none: Disable Gradient Accumulation and use the global batch_size parameter as the micro-batch size. This may result in the model with the given batch size being too large to fit into device memory, in which case compilation will fail. If it does fit, however, the chosen batch size may be suboptimal for performance.
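As a rough summary of the four settings, the sketch below classifies a micro_batch_size value the way the list above describes it. This is a hypothetical helper, not how the Cerebras compiler parses its config; the function name, the returned labels, and the use of `ValueError` are assumptions.

```python
def classify_micro_batch_size(value, compile_only=False):
    """Map a micro_batch_size setting to one of the four documented modes.

    Hypothetical illustration only; not a Cerebras API.
    """
    # An unspecified value falls back to the default, "auto".
    if value is None or value == "auto":
        return "auto"
    if value == "none":
        return "none"
    # "explore" may be a bare string or a mapping with optional min/max bounds.
    if value == "explore" or (isinstance(value, dict) and "explore" in value):
        if not compile_only:
            raise ValueError("explore can only be used in compile_only mode")
        return "explore"
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(value, int) and not isinstance(value, bool) and value > 0:
        return "explicit"
    raise ValueError(f"unsupported micro_batch_size setting: {value!r}")


print(classify_micro_batch_size(None))                         # auto
print(classify_micro_batch_size(64))                           # explicit
print(classify_micro_batch_size("explore", compile_only=True)) # explore
```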

Note

Model performance is a function of the micro-batch size used on a Cerebras system. For example, for a given model a micro-batch of “64” will perform equally well regardless of the values used for num_csx or the global batch_size (as long as batch_size / num_csx is a multiple of the micro-batch size).

Note

The Gradient Accumulation feature will auto-disable for models that it does not support even if micro_batch_size is set. This includes models using batch normalization, or other kinds of non-linear computation over the batch dimension.

Using “explore” to Search for a Near-Optimal Microbatch Size#

In addition to reducing model memory usage, selecting an effective micro_batch_size can significantly improve model performance. However, finding a good micro-batch size can be a cumbersome process. Cerebras’ Automatic Batch Exploration (CABE) tool provides a convenient way to select a well-performing micro-batch size.

Procedure#

To enable Automatic Batch Exploration, modify the YAML file for your model by setting the following parameters:

1. Set the num_csx and batch_size parameters. These parameters give the compiler stack an initial data point, but their values do not affect the micro-batch size recommended by the flow. The batch size can be the same as the default batch size defined in Model Zoo for the model.

2. Set micro_batch_size to “explore” in the train_input or eval_input section of the YAML file as:

micro_batch_size: "explore"

3. If you have a specific range in mind for acceptable micro-batch sizes, you can define a batch exploration range to limit the search space and get to a set of recommended options more quickly. You can specify this range by providing either one or both of the bounds as follows:

    micro_batch_size:
        explore:
            min: $min
            max: $max

4. Finally, launch a compile_only run to start exploration (set compile_only via a run.py argument or as a yaml parameter).

Expected Output#

As the flow explores the micro-batch vs. performance search space, it recommends interim performant micro-batch sizes. This approach saves time and effort while ensuring near-optimal performance. These messages can be found in the run*.log file under local_compile_<train/eval>/model_dir/. Below is a sample of such messages:

2024-02-12 12:20:15 INFO: Current recommended micro_batch_size: 1, estimated performance: 1.00x base, CABE exploration progress: 22%

2024-02-12 12:27:55 INFO: Current recommended micro_batch_size: 2, estimated performance: 1.20x base, CABE exploration progress: 45%

2024-02-12 12:41:56 INFO: Current recommended micro_batch_size: 2, estimated performance: 1.20x base, CABE exploration progress: 67%

2024-02-12 13:40:39 INFO: Current recommended micro_batch_size: 4, estimated performance: 1.23x base, CABE exploration progress: 90%

Each performance estimate for a recommended micro_batch_size is expressed relative to the base performance set by the first recommendation. In the example above, the second message, which recommends micro_batch_size: 2, estimates that this option is likely to provide 1.20x the performance of micro_batch_size: 1, which is used as the baseline for this run of the tool.
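If you want to pull the recommendations out of a log programmatically, a small parser is enough. The sketch below assumes the messages keep exactly the format of the sample above; the regular expression and the function name `parse_recommendations` are illustrative and not part of the Cerebras tooling.

```python
import re

# Matches the three numeric fields of the sample CABE messages shown above.
LOG_PATTERN = re.compile(
    r"Current recommended micro_batch_size: (\d+), "
    r"estimated performance: ([\d.]+)x base, "
    r"CABE exploration progress: (\d+)%"
)


def parse_recommendations(log_text):
    """Return a list of (micro_batch_size, speedup_vs_base, progress_percent)."""
    return [
        (int(m.group(1)), float(m.group(2)), int(m.group(3)))
        for m in LOG_PATTERN.finditer(log_text)
    ]


sample_log = """\
2024-02-12 12:20:15 INFO: Current recommended micro_batch_size: 1, estimated performance: 1.00x base, CABE exploration progress: 22%
2024-02-12 12:27:55 INFO: Current recommended micro_batch_size: 2, estimated performance: 1.20x base, CABE exploration progress: 45%
2024-02-12 12:41:56 INFO: Current recommended micro_batch_size: 2, estimated performance: 1.20x base, CABE exploration progress: 67%
2024-02-12 13:40:39 INFO: Current recommended micro_batch_size: 4, estimated performance: 1.23x base, CABE exploration progress: 90%
"""

recommendations = parse_recommendations(sample_log)
latest = recommendations[-1]  # the most recent message reflects the furthest progress
print(latest)  # (4, 1.23, 90)
```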

After selecting the micro_batch_size, you can set the global batch size to micro_batch_size * num_csx. If you need a specific batch size due to hyper-parameter considerations, you can select a nearby value, as long as the implicit per-system batch size, batch_size / num_csx, is evenly divisible by micro_batch_size. After setting the batch size and micro-batch size parameters, you may launch either a compile-only run, if training is not needed, or a training run.
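Picking a "nearby" global batch size amounts to rounding the target to a multiple of micro_batch_size * num_csx. The helper below is a minimal sketch of that rounding; the function name and the tie-breaking choice (prefer the smaller value) are assumptions made for illustration.

```python
def nearest_valid_batch_size(target_batch_size, micro_batch_size, num_csx):
    """Round target_batch_size to the closest multiple of micro_batch_size * num_csx.

    Hypothetical helper; ties go to the smaller batch size.
    """
    unit = micro_batch_size * num_csx
    lower = (target_batch_size // unit) * unit
    upper = lower + unit
    if lower == 0:
        # Targets smaller than one unit round up to the smallest valid size.
        return upper
    if target_batch_size - lower <= upper - target_batch_size:
        return lower
    return upper


# A target of 1000 with micro_batch_size 253 on two systems.
print(nearest_valid_batch_size(1000, 253, 2))  # 1012
# The earlier example configuration is already valid and is returned unchanged.
print(nearest_valid_batch_size(512, 64, 2))    # 512
```

With the result 1012, each of the two systems receives 506 samples, which is exactly two micro-batches of 253.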

The micro-batch size recommended by CABE is specific to the current model configuration and may require adjustments if there are any changes to the model’s performance-affecting parameters. For instance, switching the model to evaluation mode or modifying the hidden size could impact performance. In such scenarios, it’s advisable to rerun CABE to ensure the micro-batch size is optimized for the new configuration.

Effective microbatching examples#

Below is a suggested list of micro-batch sizes that have demonstrated good performance, primarily with GPT-3 models. These sizes can also serve as useful estimates for other similar-sized GPT-style models, such as BLOOM and LLaMA.

| Model Family | Model Size (Params) | Micro Batch Size (MBS) |
|---|---|---|
| GPT-3 | 1.3B | 253 |
| GPT-3 | 2.7B | 198 |
| GPT-3 | 6.7B | 121 |
| GPT-3 | 13B | 99 |
| GPT-3 | 20B | 77 |
| GPT-3 | 30B | 69 |
| GPT-3 | 39B | 55 |
| GPT-3 | 65B | 55 |
| GPT-3 | 82B | 48 |
| GPT-3 | 175B | 35 |
| T5 | 3B | 256 |
| T5 | 11B | 520 |

Known issues and limitations#

The current known limitations of automatic microbatching include:

- Gradient Accumulation has been thoroughly tested mainly with transformer models. The technique is not compatible with models that incorporate batch normalization or layers that execute non-linear computations across batches.

- The functionality of Automatic Batch Exploration is confined to transformer models. Attempting to apply it to vision networks, such as CNNs, will result in a runtime error.

- To circumvent extended compile times, it’s advisable to directly assign a known effective value to the micro_batch_size parameter instead of leaving it undefined.

- Enabling Automatic Batch Exploration by setting micro_batch_size to “explore” initiates an exhaustive search, potentially extending over several hours. However, the typical compile time for most GPT models is expected to be around one hour.

Conclusion#

Automatic microbatching offers a practical way to improve model performance and efficiency, particularly for transformer models. By combining microbatching, Gradient Accumulation, and Automatic Batch Exploration, users can work within the constraints of device memory and improve computational throughput. The guidelines and parameters above offer a structured way to apply these techniques, so that models both fit within hardware limits and run with performant micro-batch sizes. The process has limitations, particularly around Gradient Accumulation’s compatibility and the scope of Automatic Batch Exploration, but the performance benefits can be substantial. Adopting these strategies can lead to more efficient model training.